4 Vestibular contributions to various aspects of the bodily self

The aim of this section is to describe several mechanisms by which the vestibular system might influence multisensory mechanisms underlying the bodily self. Again, we would like to stress that the vestibular system seems of utmost importance for the most minimal aspects of self-consciousness (i.e., the sense of location in a spatial reference frame) (Windt 2010; Metzinger 2013, 2014) but at the same time also contributes to our rich sense of a bodily self in daily life. We will try to include both aspects in the following section. We further point out that while some mechanisms of a vestibular contribution to the sense of a self are now accepted, others are still largely speculative. We start by pinpointing the influence of the vestibular system on basic bodily senses such as touch and pain (section 4.1), which are subjectively experienced as bodily, i.e., as coming from within one’s own bodily borders, and thus importantly contribute to a sense of bodily self. We then outline evidence for a vestibular contribution to several previously identified and experimentally modified components of the multisensory bodily self: body schema and body image, body ownership, agency, and self-location (sections 4.2–4.5). On the basis of recent data on self-motion perception in a social context and on the existence of shared sensorimotor representations between one’s own body and others’ bodies, we propose a vestibular contribution to the socially embedded self (section 4.6).

4.1 The sensory self

4.1.1 Touch

The feeling of touch, as its subjective perception is confined within the bodily borders, is considered crucial for the feeling of ownership and other aspects of the bodily self (Makin et al. 2008) and a loss of somatosensory signals has been associated with a disturbed sense of the bodily self (e.g., Lenggenhager et al. 2012). Vestibular processes have been shown to interact with the perception and location of tactile stimulation. Clinical studies in brain-damaged patients suffering from altered somatosensory perceptions showed transient improvement of somatosensory perception during artificial vestibular stimulation (Kerkhoff et al. 2011; Vallar et al. 1990). Furthermore, studies in healthy participants showed that caloric vestibular stimulation can alter conscious perception of touch (Ferrè et al. 2011), probably due to interfering effects in the parietal operculum (Ferrè et al. 2012). A recent study further suggests that vestibular stimulation modifies not only tactile perception thresholds, but also the perceived location of stimuli applied to the skin (Ferrè et al. 2013), a finding likely related to a vestibular influence on the body schema (see section 4.2).

Behavioural evidence of vestibulo-tactile interactions is in line with both human and animal physiological and anatomical data. Human neuroimaging studies identified areas responding to tactile, proprioceptive, and caloric vestibular stimulation in the posterior insula, retroinsular cortex, and parietal operculum (Bottini et al. 1995; Bottini et al. 2001; Bottini et al. 2005; zu Eulenburg et al. 2013). Electrophysiological recordings in monkeys revealed a vestibulo-somesthetic convergence in most of the PIVC neurons. Bimodal neurons in the PIVC have large somatosensory receptive fields often located in the region of the neck and respond to muscle pressure, vibrations, and rotations applied to the neck (Grüsser et al. 1990b).

To date, the influence of caloric vestibular stimulation on somatosensory perception has been measured at the level of peripheral body parts only (e.g., the capacity to detect touch applied to the hand, or to locate touch on the hand), but not on more central body parts or the entire body. Here, we propose that vestibular signals are not only important for sensory processes and awareness of body parts, but even more for full-body awareness. This hypothesis is supported by findings from mapping of the posterior end of the lateral sulcus in rhesus monkey that revealed neurons in the granular field of the posterior insula with large and bilateral tactile receptive fields (Schneider et al. 1993). The range of stimuli used included brushing and stroking the hair, touching the skin, muscles and other deep structures, and manipulating the joints. Importantly, the authors noted that some neurons had receptive fields covering the entire surface of the animal body, excluding the face. As can be seen in figure 4C, those neurons (red dots) were located in the most posterior part of the insula. Functional mapping conducted in the dorsal part of the insula in other monkey species has also identified neurons with large and sometimes bilateral tactile receptive fields (Coq et al. 2004) (figure 4D). So far, there is no direct evidence that neurons with full-body receptive fields receive vestibular inputs, probably because to date few electrophysiological studies have directly investigated the convergence of vestibular and somatosensory signals in the lateral sulcus (Grüsser et al. 1990a; Grüsser et al. 1990b; Guldin et al. 1992). We hypothesize that caloric and galvanic vestibular stimulation, as well as physical head rotations and translations, are likely to interfere with populations of neurons with whole-body somatosensory receptive fields and therefore may strongly impact full-body awareness. 
Indeed, in daily life the basic sense of touch, especially regarding large body segments, should be crucial to experience a bodily self. While full-body tactile perception has not been directly assessed during vestibular stimulation, the fact that caloric vestibular stimulation in healthy participants as well as acute vestibular dysfunction can evoke a feeling of strangeness and numbness for the entire body might point in this direction (see Lopez 2013, for a review).

4.1.2 Pain

Similar to touch, the experience of pain has been described as crucial to self-consciousness and the feeling of an embodied self. In his book “Still Lives—Narratives of Spinal Cord Injury” (Cole 2004), the neurophysiologist Jonathan Cole reports the case of a patient with a spinal cord lesion who described that “the pain is almost comfortable. Almost my friend. I know it is there, it puts me in contact with my body” (p. 89). This citation impressively illustrates how important the experience of pain might be in some instances for the sense of a bodily self. Reciprocal relations between pain and the sense of self are further supported by observations of altered pain perception and thresholds during dissociative states of bodily self-consciousness, such as depersonalization (Röder et al. 2007), dissociative hypnosis (Patterson & Jensen 2003) and out-of-body experiences (Green 1968). Similarly, acting in an immersive virtual environment is also associated with an increase in pain thresholds (Hoffman et al. 2004), a fact that is now increasingly exploited in virtual-reality-based pain therapies. This increase in pain threshold depends on the strength of the feeling of presence in the virtual environment, i.e., the sense of “being there,” located in the virtual environment (Gutiérrez-Martínez et al. 2011; see also section 4.5.2.1). These analgesic effects of immersion and presence in virtual realities are usually explained by attentional resource mechanisms (i.e., attention is directed to the virtual environment rather than the painful event). Yet, all described instances also involve illusory self-location, which has been shown in full-body illusions to be accompanied by an increase in pain thresholds or an altered arousal response to painful stimuli (Hänsel et al. 2011; Romano et al. 2014). We thus speculate that analgesic effects of immersion could also be linked to disintegrated multisensory signals and a related illusory change in self-location and global self-identification.
Since the vestibular system is crucially involved in self-location (see section 4.5), we suggest that some interaction effects between altered self-location and pain may be mediated by the vestibular system.[11] Interestingly, galvanic and caloric stimulation, which also induce illusory changes in self-location, increase pain thresholds in healthy participants (Ferrè et al. 2013). This result and several clinical observations suggest an interplay between vestibular processes, nociceptive processes, and the sense of the bodily self (André et al. 2001; Balaban 2011; Gilbert et al. 2014; McGeoch et al. 2008; Ramachandran et al. 2007).

These interactions are likely to rely on multimodal areas in the insular cortex. Intracranial electrical stimulations of the posterior insula in conscious epileptic patients revealed nociceptive representations with a somatotopic organization (Mazzola et al. 2009; Ostrowsky et al. 2002). Functional neuroimaging studies in healthy participants also demonstrated that painful stimuli (usually applied to the hand or foot) activate the operculo-insular complex (Baumgartner et al. 2010; Craig 2009; Kurth et al. 2010; Mazzola et al. 2012; zu Eulenburg et al. 2013). It has to be noted that vestibulo-somesthetic convergence may also exist in thalamic nuclei such as the ventroposterior lateral nucleus, known to receive both somatosensory and vestibular signals (Lopez & Blanke 2011). The parabrachial nucleus of the brainstem is also a region where vestibular and nociceptive signals converge, as shown by noxious mechanical and thermal cutaneous stimulations (Balaban 2004; Bester et al. 1995). The parabrachial nucleus is further strongly interconnected with the insula and amygdala and may control some autonomic manifestations of pain (Herbert et al. 1990). Furthermore, a recent fMRI study revealed an overlap between brain activations caused by painful stimuli and by artificial vestibular stimulation in the anterior insula (zu Eulenburg et al. 2013), a structure that has been proposed to link the homeostatic evaluation of the current state of the bodily self to broader social and motivational aspects (Craig 2009). We speculate that such an association could explain why illusory changes in self-location during vestibular stimulation or during full-body illusions increase pain thresholds.

4.1.3 Interoception

Visceral signals and their cortical representation—often referred to as interoception—are thought to play a core role in giving rise to a sense of self (e.g., Seth 2013). It has been proposed that visceral signals influence various aspects of emotional and cognitive processes (e.g., Furman et al. 2013; Lenggenhager et al. 2013; Werner et al. 2014; van Elk et al. 2014) and anchor the self to the physical body (Maister & Tsakiris 2014; Tsakiris et al. 2011). For this reason, various clinical conditions involving disturbed self-representation and dissociative states have been related to abnormal interoceptive processing (Seth 2013, but see also Michal et al. 2014 for an exception). Further evidence that interactions of exteroceptive with interoceptive signals play a role in building a self-representation comes again from research using bodily illusions in healthy participants. Two recent studies introduced an interoceptive version of the rubber hand illusion (Suzuki et al. 2013) and the full-body illusion (Aspell et al. 2013). In both cases, a visual cue on the body part/full body was presented in synchrony/asynchrony with the participant’s own heartbeat. Synchrony increased self-identification with the virtual hand or body and modified the experience of self-location, thus suggesting a modulation of these components through interoceptive signals.

Vestibular processing in the context of such interoceptive bodily illusions has not yet been studied. Yet, we would like to emphasize the important interactions between the vestibular system and the regulation of visceral and autonomic functions at both functional and neuroanatomical levels (review in Balaban 1999). As mentioned earlier, the coding of body orientation in space relies on otolithic information signaling the head orientation with respect to gravity. Self-orientation with respect to gravity also requires that the brain integrates these vestibular signals with information from gravity receptors in the trunk (e.g., visceral signals from kidneys and blood vessels) (Mittelstaedt 1992; Mittelstaedt 1996; Vaitl et al. 2002). Other examples of interactions between the vestibular system and autonomic regulation come from the vestibular control of blood pressure, heart rate, and respiration (Balaban 1999; Jauregui-Renaud et al. 2005; Yates & Bronstein 2005). Blood pressure, for instance, needs to be adapted as a function of body position in space and the vestibular signals are crucially used to regulate the baroreflex. Vestibular-mediated symptoms of motion sickness such as pallor, sweating, nausea, salivation, and vomiting are also very well-known and striking examples of the vestibular influence on autonomic functions.

At the anatomical level, there is a large body of data showing that vestibular information projects to several brain structures involved in autonomic regulation, including the parabrachial nucleus, nucleus of the solitary tract, paraventricular nucleus of the hypothalamus, and the central nucleus of the amygdala. Important research has been conducted in the monkey and rat parabrachial nucleus as this nucleus contains neurons responding to natural vestibular stimulation (McCandless & Balaban 2010) and is involved in the ascending pain pathways and cardiovascular pathways to the cortex and amygdala (Bester et al. 1995; Feil & Herbert 1995; Herbert et al. 1990; Jasmin et al. 1997; Moga et al. 1990). The parabrachial nucleus receives projections from several cortical regions, including the insula, as well as from the hypothalamus and amygdala (Herbert et al. 1990; Moga et al. 1990). Accordingly, the parabrachial nucleus should be a crucial brainstem structure for basic aspects of the self as it is a place of convergence for nociceptive, visceral, and vestibular signals.

While research on the effects of vestibular stimulation on interoceptive awareness is still missing, we propose that artificial vestibular stimulation might be a particularly interesting means to manipulate interoception and investigate its influence on the sense of a bodily self.

4.2 Body schema and body image

Here, we propose that vestibular signals are not only important for the interpretation of basic somatosensory (tactile, nociceptive, interoceptive) processes, but as a consequence also contribute to body schema and body image. Body schema and body image are different types of models of motor configurations and body metric properties, including the size and shape of body segments (e.g., Gallagher 2005; de Vignemont 2010; Berlucchi & Aglioti 2010; Longo & Haggard 2010). Although body schema and body image are traditionally thought to be of mostly proprioceptive and visual origin, respectively, a vestibular contribution was already postulated over a century ago (review in Lopez 2013). Pierre Bonnier (1905) described several cases of distorted bodily perceptions in vestibular patients and coined the term “aschématie” (meaning a “loss” of the schema) to describe these distorted perceptions of the volume, shape, and position of the body. Paul Schilder (1935) also noted distorted body schema and image in vestibular patients claiming for example that their “neck swells during dizziness,” “extremities had become larger,” or “feet seem to elongate.” The contribution of vestibular signals to mental body representations has been recognized more recently by Jacques Paillard. He proposed that “the ubiquitous geotropic constraint [i.e., gravitational acceleration, which is detected and coded by vestibular receptors] dominates the [body-, world-, object- and retina-centered] reference frames that are used in the visuomotor control of actions and perceptions, and thereby becomes a crucial factor in linking them together” (Paillard 1991, p. 472). According to Paillard, gravity signals would help merge and give coherence to the various reference frames underpinning action and perception.

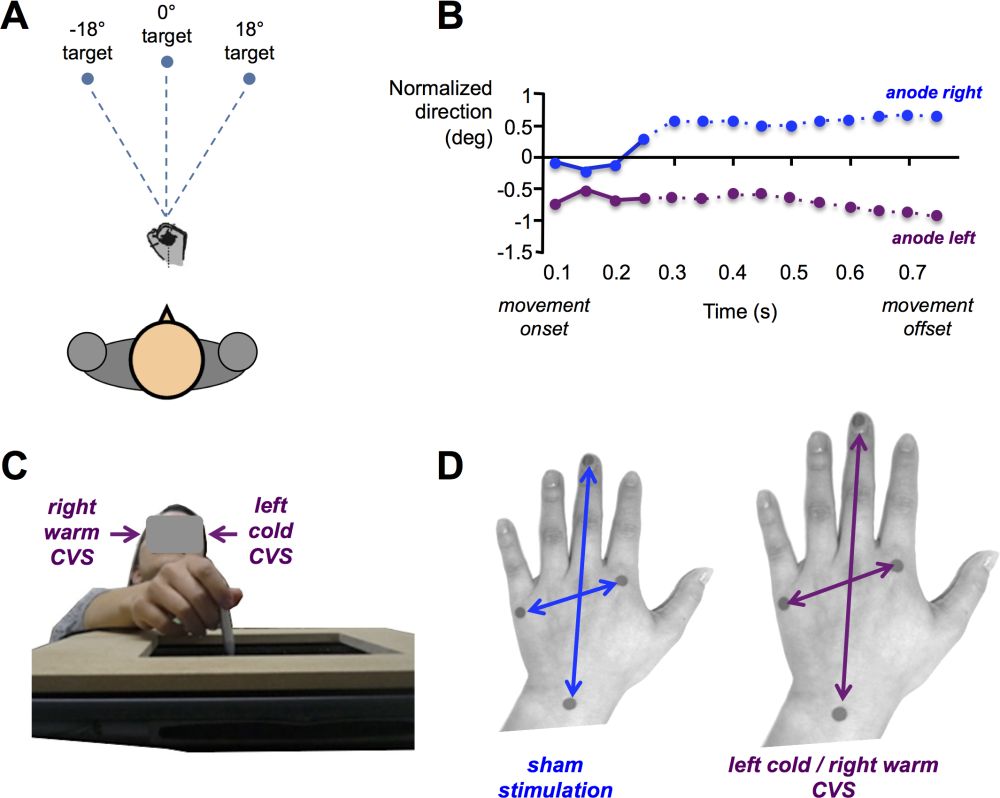

Because humans have evolved under a constant gravitational field, human body representations are strongly shaped by this physical constraint. In particular, grasping and reaching movements are constrained by gravito-inertial forces and internal models of gravity (Indovina et al. 2005; Lacquaniti et al. 2013; McIntyre et al. 2001). Thus, the body schema and action potentialities must take into account signals from the otolithic sensors. For example, when a subject is instructed to reach a target while his entire body is rotated on a chair, the body rotation generates Coriolis and centrifugal forces deviating the hand. Behavioural studies demonstrate that vestibular signals generated during whole-body rotations are used to correct the hand trajectory (Guillaud et al. 2011). Other studies demonstrate that vestibular signals continuously update the body schema during hand actions. Bresciani et al. (2002) asked participants to point to previously memorized targets located in front of them (figure 5A). At the same time, participants received bilateral galvanic vestibular stimulation, with the anode on one side and the cathode on the other side. The data indicate that the hand was systematically deviated toward the side of anodal stimulation (figure 5B). It is important to note that galvanic vestibular stimulation is known to evoke illusory body displacements in the frontal plane and thus modifies the perceived self-location (Fitzpatrick et al. 2002; see also section 4.5). One possible interpretation of the change in hand trajectory during the pointing movement was that it compensated for an “apparent change in the spatial relationship between the target and the hand,” evoked by the vestibular stimulation (Bresciani et al. 2002). Thus, vestibular signals are used to control the way we act and interact with objects in the environment.

After having established the contribution of vestibular signals to hand location and motion, we shall describe the role of vestibular signals in the perception of the body’s metric properties (the perceived shape and size of body segments). During parabolic flights, known to create temporary weightlessness and thus mimic a deafferentation of the otolithic vestibular sensors, Lackner (1992) reported cases of participants experiencing a “telescoping motion of the feet down and the head up internally through the body,” that is, an inversion of their body orientation. Experiments conducted on animals born and raised in hypergravity confirm an influence of vestibular signals on body representations. In these animals, changes in the strength of the gravitational field permanently disorganized the somatosensory maps recorded in their primary somatosensory cortex (Zennou-Azogui et al. 2011).

Experimental evidence of a vestibular contribution to the coding of body metric properties comes from the application of stimulation in healthy participants. In a recent study, Lopez et al. (2012) showed that caloric vestibular stimulation modified the perceived size of the body during a proprioceptive judgment task (figure 5C). Participants had their left hand palm down on a table. Above the left hand, there was a digitizing tablet on which participants were instructed to localize four anatomical targets enabling the calculation of the perceived width and length of the left hand. While participants pointed repeatedly to these targets, they received bilateral caloric vestibular stimulation known to stimulate the right cerebral hemisphere in which the left hand is mostly represented (e.g., warm air in the right ear and cold air in the left ear). The results showed that in comparison to a control stimulation (injection of air at 37°C in both ears), in the stimulation condition the left hand appeared significantly enlarged (figure 5D), showing that vestibular signals can modulate internal models of the body.
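The size estimate in this kind of proprioceptive judgment task can be illustrated with a short sketch. It is not the analysis used by Lopez et al. (2012): the four landmark names, the coordinate units, and the helper functions below are assumptions made for illustration only, since the text above states only that four anatomical targets were localized and that perceived width and length were derived from them.

```python
import math
from statistics import mean

# Hypothetical landmark names: the source does not specify which four
# anatomical targets were used, so these are illustrative assumptions.
LENGTH_PAIR = ("middle_fingertip", "wrist_midpoint")
WIDTH_PAIR = ("index_knuckle", "little_knuckle")


def perceived_hand_size(trials):
    """Return (length, width) averaged over repeated pointing trials.

    Each trial is a dict mapping a landmark name to the (x, y) position
    at which the participant pointed on the tablet (arbitrary units, e.g. cm).
    """
    lengths = [math.dist(t[LENGTH_PAIR[0]], t[LENGTH_PAIR[1]]) for t in trials]
    widths = [math.dist(t[WIDTH_PAIR[0]], t[WIDTH_PAIR[1]]) for t in trials]
    return mean(lengths), mean(widths)


def percent_change(stimulation, control):
    """Relative change of a size estimate with respect to the control condition."""
    return 100.0 * (stimulation - control) / control


if __name__ == "__main__":
    # Made-up coordinates, for demonstration only (not data from the study).
    control_trials = [{
        "middle_fingertip": (0.0, 18.0), "wrist_midpoint": (0.0, 0.0),
        "index_knuckle": (-4.0, 9.0), "little_knuckle": (4.0, 9.0),
    }]
    length, width = perceived_hand_size(control_trials)
    print(f"length={length:.1f}, width={width:.1f}")
```

Comparing such estimates between the stimulation and control conditions (for instance with `percent_change`) gives one simple way to express an apparent enlargement of the hand.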

Figure 5: Influence of vestibular signals on motor control and perceived body size. (A) Pointing task toward memorized targets. Participants received binaural galvanic vestibular stimulation as soon as they initiated the hand movement (with eyes closed). (B) Deviation of the hand trajectory towards the anode (modified after Bresciani et al. 2002). (C) Proprioceptive judgment task used to estimate the perceived size of the left hand. Participants were tested blindfolded and used a stylus held in their right hand to localize on a digitizing tablet four anatomical landmarks corresponding to the left hand under the tablet. (D) Illustration of the perception of an enlarged hand during caloric stimulation activating the right cerebral hemisphere (modified after Lopez et al. 2012).

4.3 Body ownership

Correct self-attribution of body parts and self-identification with the entire body rely on successful integration of multisensory information, as evidenced by various bodily illusions in healthy participants (e.g., Botvinick & Cohen 1998; Lenggenhager et al. 2007; Petkova & Ehrsson 2008). So far there is only limited evidence of a vestibular contribution to the sense of body ownership. Bisiach et al. (1991) described a patient with a lesion of the right parieto-temporal cortex who suffered from somatoparaphrenia, claiming that her left hand did not belong to her. In this patient, caloric vestibular stimulation transiently restored normal ownership for her left hand. Similarly, Lopez et al. (2010) applied galvanic vestibular stimulation to participants experiencing the rubber hand illusion and showed that the vestibular stimulation increased the feeling of ownership for the fake hand. The authors have linked such interaction between the vestibular system, multisensory integration, and body ownership to overlapping cortical areas in temporo-parietal regions and the posterior insula. No study has so far investigated the effect of vestibular stimulation on full-body ownership. Yet, reports from patients with acute vestibular disturbances as well as reports from healthy participants during caloric vestibular stimulation (Lopez 2013; Sang et al. 2006) suggest that full-body ownership might also be modified by artificial vestibular stimulation or vestibular dysfunctions. Given the importance of the vestibular system in more global aspects of the bodily self, we predict that vestibular stimulation would influence ownership even more strongly in a full-body illusion than in a body-part illusion set-up.

4.4 The acting self: Sense of agency

As mentioned earlier, the sense of being the agent of one’s own actions is another crucial aspect of the sense of self. Agency relies on sensorimotor mechanisms comparing the motor efference copy with the sensory feedback from the movement, and on other cognitive mechanisms such as the expectation of a self-generated movement (Cullen 2012; Jeannerod 2003, 2006). While no study so far has directly investigated vestibular mechanisms of the sense of agency, recent progress in this direction has been made in a study investigating full-body agency during a goal-directed locomotion task (Kannape et al. 2010). Participants walked toward a target and observed their motion-tracked walking patterns applied to a virtual body projected on a large screen in front of them. Various angular biases were introduced between their real locomotor trajectory and that projected on the screen. Comparable to the classical experiments assessing agency for a body part (Fourneret & Jeannerod 1998), these authors investigated the discrepancy up to which the motion of the avatar shown on the screen was still perceived as their own. During this task, the brain detects not only visuo-motor coherence but also vestibulo-visual coherence, and self-attribution of the seen movements is thus likely to depend on vestibular signal processing. In the following we present an example of neural coding underlying an aspect of the sense of agency in several structures of the vestibulo-thalamo-cortical pathways.

In the vestibular system, peripheral organs encode in a similar way head motions for which the subject is or is not the agent.[12] Thus, vestibular organs generate similar signals during an active rotation of the head (i.e., the person is the agent of the action) or during a passive, externally imposed, rotation of the head (i.e., the person is passively moved while sitting on a rotating chair). It is important for the central nervous system to establish whether afferent vestibular signals are generated by active or passive head movements, and this is done at various levels. Electrophysiological studies conducted in monkeys have revealed that some vestibular nuclei neurons were silent, or had a strongly reduced firing rate, during active head rotations, whereas their firing rate was significantly modulated by passive head rotations. This indicates that vestibular signals generated by active head rotations were suppressed or attenuated. This suppression of neural responses was found in the vestibular nuclei complex (Cullen 2011; Roy & Cullen 2004), thalamus (Marlinski & McCrea 2008b) and cerebral cortex, for example in areas of the intraparietal sulcus (Klam & Graf 2003, 2006). Several studies were conducted to determine which signal might induce such suppression. Roy & Cullen (2004) suggested that a motor efference copy was used. They showed that the suppression occurred “only in conditions in which the activation of neck proprioceptors matched that expected on the basis of the neck motor command”, suggesting that “vestibular signals that arise from self-generated head movements are inhibited by a mechanism that compares the internal prediction of the sensory consequences by the brain to the actual resultant sensory feedback” (p. 2102). In conclusion, as early as the first relay along the vestibulo-thalamo-cortical pathways, neural mechanisms have the capacity to distinguish between the consequences of active and passive movements on vestibular sensors. 
Given this evidence, we suggest an important contribution of the vestibular system to the sense of agency in general and to full-body agency in particular.
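The comparator logic described above can be made concrete with a toy sketch. It is a deliberately simplified illustration under stated assumptions, not a model taken from Roy & Cullen (2004): the function name, the scalar signals, and the `tolerance` parameter are all hypothetical. The only idea it carries over from the text is that the vestibular response to a head movement is attenuated when neck proprioceptive feedback matches what the motor command predicted.

```python
def vestibular_nuclei_response(afferent, predicted_reafference,
                               neck_feedback, expected_neck_feedback,
                               tolerance=0.1):
    """Toy comparator for active versus passive head rotations.

    afferent               : head velocity signalled by the vestibular organs
    predicted_reafference  : head velocity predicted from the efference copy
    neck_feedback          : actual neck proprioceptive signal
    expected_neck_feedback : neck signal expected from the motor command
    tolerance              : hypothetical threshold for declaring a "match"

    All quantities are scalars in arbitrary units (an assumption of this sketch).
    """
    prediction_matches = abs(neck_feedback - expected_neck_feedback) <= tolerance
    if prediction_matches:
        # Self-generated component is cancelled; only the unexpected part remains.
        return afferent - predicted_reafference
    # Mismatch between expected and actual feedback: no cancellation.
    return afferent


# Active rotation: command predicts 40 units, neck feedback matches the prediction.
active = vestibular_nuclei_response(40.0, 40.0, neck_feedback=1.0,
                                    expected_neck_feedback=1.0)
# Passive whole-body rotation: no motor command, so nothing is predicted.
passive = vestibular_nuclei_response(40.0, 0.0, neck_feedback=0.0,
                                     expected_neck_feedback=0.0)
# Motor command issued but neck feedback does not match (e.g., head held still).
mismatch = vestibular_nuclei_response(40.0, 40.0, neck_feedback=0.0,
                                      expected_neck_feedback=1.0)
```

In this sketch the residual signal is zero for a correctly predicted active movement and equal to the full afferent signal for a passive one, which mirrors the qualitative pattern reported for vestibular nuclei neurons.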

4.5 The spatial self: Self-location

4.5.1 Behavioural studies in humans

Self-location is the experience of where “I” am located in space and is one of the (if not the) crucial aspects of the bodily self (Blanke 2012). Recently, self-location has been systematically investigated in human behavioural and neuroimaging studies using multisensory conflicts (Ionta et al. 2011; Lenggenhager et al. 2007; Lenggenhager et al. 2009; Pfeiffer et al. 2013). While we usually experience ourselves as located within our own bodily borders at one single location in space, the sense of self-location can be profoundly disturbed in psychiatric and neurological conditions, most prominently during out-of-body experiences (Bunning & Blanke 2005). Based on findings in neurological patients that revealed a frequent association between vestibular illusions (floating in the room, sensation of lightness or levitation) and out-of-body experiences, Blanke and colleagues proposed that the illusory disembodied self-location was due to a dis-integration of vestibular signals with signals from the personal (tactile and proprioceptive signals) and extrapersonal (visual) space (Blanke et al. 2004; Blanke & Mohr 2005; Blanke 2012; Lopez et al. 2008). The authors proposed that this multisensory disintegration is mostly a result of abnormal neural activity in the temporo-parietal junction (Blanke et al. 2005; Blanke et al. 2002; Heydrich & Blanke 2013; Ionta et al. 2011). In this section, we review experimental data in healthy participants that may account for the tight link between vestibular disorders and illusory or simulated changes in self-location. While the most direct evidence of such a link comes from the finding that artificial stimulation of the vestibular organs induces an illusory change in self-location[13] (Fitzpatrick & Day 2004; Fitzpatrick et al. 2002; Lenggenhager et al. 2008), we focus on three experimental set-ups that have been used to alter the experience of self-location in healthy participants.

4.5.1.1 Illusory change in self-location during full-body illusions

Full-body illusions have increasingly been used to study the mechanisms underlying self-location (see Blanke 2012, for a review). No study has so far investigated the influence of artificial vestibular stimulation on such illusions. Nevertheless, there is some experimental evidence suggesting a vestibular involvement in illusory changes in self-location. While the initial full-body illusion was described in a standing position (Lenggenhager et al. 2007), the paradigm has later been adapted to a lying position (Ionta et al. 2011; Lenggenhager et al. 2009; Pfeiffer et al. 2013), mainly because the frequency of spontaneous out-of-body experiences is higher in lying position than in standing or sitting positions (Green 1968). It has been speculated that this influence of the body position on the sense of embodiment is related to the decreased sensitivity of otolithic vestibular receptors and decreased motor and somatosensory signals in the lying position (Pfeiffer et al. 2013). We hypothesized that under such conditions of reduced vestibular (and proprioceptive) information, visual capture is enhanced in situations of multisensory conflict, thus resulting in a stronger change in self-location during the full-body illusion. So far, the full-body illusion has not been directly compared in standing versus lying positions. However, the application of visuo-tactile conflicts in a lying position not only alters self-location but also evokes sensations of floating (Ionta et al. 2011; Lenggenhager et al. 2007). This finding hints toward a reweighting of visual, tactile, proprioceptive, and vestibular information during the illusion, plausibly in the temporo-parietal junction and human PIVC. In line with this finding, the changes in self-location and perspective have been associated with individual perceptual styles of visual-field dependence (Pfeiffer et al. 2013), i.e., weighting of visual as compared to vestibular information in a subjective visual vertical task, suggesting an individually different contribution and weighting of the various senses for the construction of the bodily self (for a similar finding regarding the rubber hand illusion, see David et al. 2014).

4.5.1.2 Mental own-body transformation and perspective taking

Another way to investigate bodily self-consciousness has been to use experimental paradigms requiring participants to put themselves “into the shoes” of another individual, that is to mentally simulate an external self-location (own-body, egocentric, mental transformation tasks) and a third-person visuo-spatial perspective. Typically, participants are instructed to make left-right judgments about a body, for example, to judge whether the person shown is wearing a glove on his right or left hand (Blanke et al. 2005; Lenggenhager et al. 2008; Parsons 1987; Schwabe et al. 2009). Other tasks require that participants adopt the visual perspective of another person to decide whether a visual object is to the right or left of the other person (David et al. 2006; Lambrey et al. 2012; Vogeley & Fink 2003). Early studies have shown that the time needed for own-body mental transformations correlates with the distance or angle between the participant’s position in the physical space and the position to be simulated (Parsons 1987). It is widely accepted that own-body mental transformation is an “embodied” mental simulation that can be influenced by various sensorimotor signals from the body (e.g., Kessler & Thomson 2010). In line with this view, various experiments demonstrated that the actual body position influences mental own-body transformation of body parts (e.g., Ionta et al. 2012). Importantly, next to proprioceptive and motor mechanisms, visuo-spatial perspective taking and own-body mental transformation also require the integration of vestibular information (active or passive body motion). Thus, while most of this research looked at how body parts’ posture (e.g., of the hand) influences mental own-body (part) transformation, some recent research investigated how mental own-body transformation is influenced by vestibular cues (Candidi et al. 2013; Dilda et al. 2012; Falconer & Mast 2012; Lenggenhager et al. 2008; van Elk & Blanke 2014).
All these studies revealed that vestibular signals influence mental (full) own-body transformation, confirming again the influence of the vestibular system in the sense of self-location and perspective taking.[14]

4.5.1.3 Change in self-location and the feeling of presence

The development of immersive virtual environments has opened a powerful research area in which the mechanisms of self-location can be investigated by manipulating the feeling of presence. The term “presence” stems from virtual reality technologies and commonly refers to the feeling of being immersed (“being there”) in the virtual environment. Yet, it has been argued that “presence” also reflects a more general and basic state of consciousness (Riva et al. 2011). The study of presence has thus been suggested to provide useful tools to study (self-)consciousness, with the advantage of precise experimental control (Sanchez-Vives & Slater 2005).

Similar to the previously mentioned full-body illusions, a participant who is immersed in a virtual environment receives conflicting multisensory information about his or her self-location: while visual information suggests that he or she is located in a virtual world, proprioceptive information suggests that he or she is located in the real world, for example, by indicating a different body position between the physical body and the avatar. Furthermore, and contrary to the full-body illusion, the visual information often indicates that the participant is moving, whereas the proprioceptive and vestibular information suggests that he or she is sitting still. The compelling feeling of presence in virtual environments indicates that participants rely strongly on visual cues. Of note, some authors have proposed that a sort of bi-location is possible in such a situation, in which one feels, to a certain degree, localized simultaneously in both the real and virtual environments (Furlanetto et al. 2013; Wissmath et al. 2011). Such bi-location has also been described in a clinical condition called heautoscopy (e.g., Blanke & Mohr 2005; Brugger et al. 1994).

Neuroimaging studies in healthy participants showed that self-identification with, and self-localization at, the position of a virtual avatar seen from a third-person perspective activates the left inferior parietal lobe (Corradi-Dell’Acqua et al. 2008; Ganesh et al. 2012). Consistent with this, people who are addicted to video games show altered processing in a left posterior area of the middle temporal gyrus (Kim et al. 2012). These studies converge in their conclusion that multimodal areas in the temporo-parietal junction are involved in altered self-localization in virtual reality. As mentioned before, the temporo-parietal junction is a key region for vestibular processing. We thus hypothesize that the feeling of presence might be mediated by vestibular signals, a hypothesis that should be directly tested by assessing whether the feeling of presence can be modified by caloric and galvanic vestibular stimulation.

4.5.2 Physiological and vestibular mechanisms of self-location

4.5.2.1 Categories of cells coding self-location and self-orientation

Electrophysiological investigations in rodents have identified three categories of neurons encoding specifically where the animal is located, how its head is oriented, and how the animal moves in its environment (see Barry & Burgess 2014, for a recent review). These neurons are referred to in the literature as “place cells,” “head-direction cells,” and “grid cells.” In rats, place cells have been recorded as early as the 1970s in the hippocampus, and later in the subiculum and entorhinal cortex (O’Keefe & Conway 1978; O’Keefe & Dostrovsky 1971; Poucet et al. 2003). The firing rate of these neurons increases when the animal is located at a specific position within the environment. This activity is strongly modulated by allocentric signals (visual references in the environment) and vestibular signals (Wiener et al. 2002). Place cells have later been identified in several other animal species including mice (McHugh et al. 1996), bats (Ulanovsky & Moss 2007), monkeys (Furuya et al. 2014; Ludvig et al. 2004; Matsumura et al. 1999; Ono et al. 1993) and humans (Ekstrom et al. 2003; Miller et al. 2013). Head-direction cells were first recorded in the rat postsubiculum and later in several nuclei constituting the Papez circuit, such as the dorsal thalamic nucleus and lateral mammillary nuclei (Taube 2007). They were also found in the retrosplenial and entorhinal cortex. Electrophysiological recordings revealed that head-direction cells “discharge allocentrically as a function of the animal’s directional heading, independent of the animal’s location and ongoing behavior” (Taube 2007). Head-direction cells have also been identified in the monkey hippocampus (Robertson et al. 1999). Finally, grid cells have been identified in the rat medial entorhinal cortex, but also in the pre- and parasubiculum (Boccara et al. 2010; Sargolini et al. 2006). Grid cells fire for multiple locations of the animal within its environment. Altogether, these locations form a periodic pattern, or “grid,” spanning the entire surface of the environment. More recently, electrophysiological recordings have shown grid cells in mice (Fyhn et al. 2008), bats (Yartsev et al. 2011) and monkeys (Killian et al. 2012), and even probable homologues of grid cells in the human hippocampus (Doeller et al. 2010; Jacobs et al. 2013).

4.5.2.2 Place cells in the human hippocampus and “virtual” self-location

We can only speculate about the neural mechanisms of place and head-direction specific coding in the human brain. With the non-invasive neuroimaging techniques available to date (fMRI, PET, scalp electroencephalography (EEG), near-infrared spectroscopy (NIRS)), it remains difficult to investigate neural activity of potential human homologues of place cells, head-direction cells and grid cells (for fMRI identification of grid cells, see Doeller et al. 2010). Single-unit recordings can only be obtained during the rather rare intracranial EEG recordings carried out for presurgical evaluation of drug-refractory epilepsy.

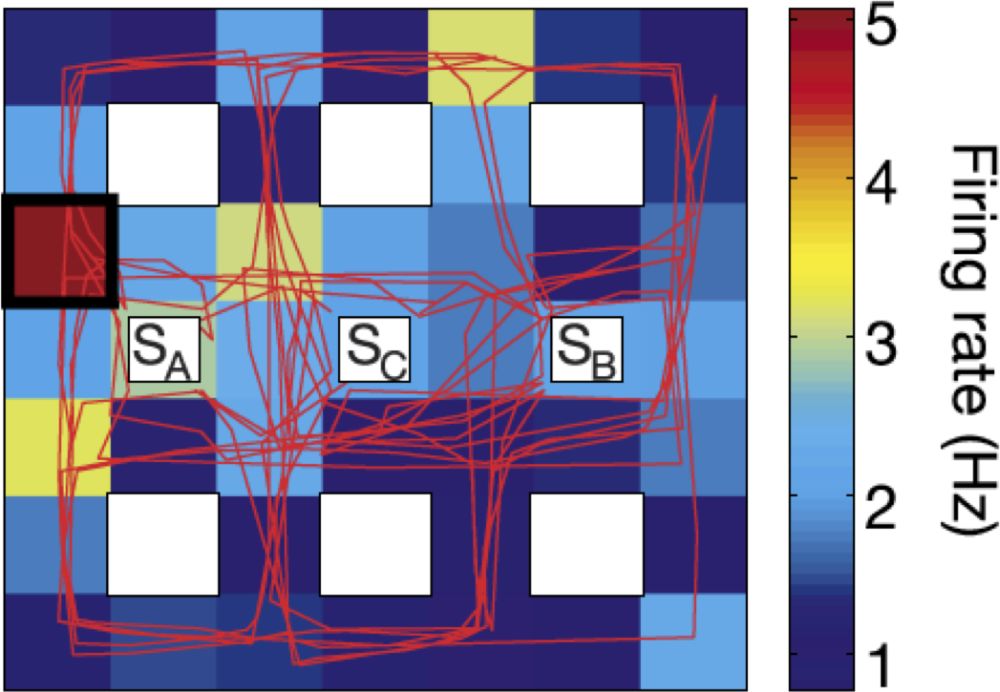

In a seminal intracranial EEG study conducted in seven epileptic patients, Ekstrom et al. (2003) identified neurons with place selectivity in the hippocampus. Patients were immersed in a virtual environment and played a taxi driver computer game, picking up customers at one location in the virtual town and delivering them to another. As illustrated in figure 6, a neuron recorded in the right hippocampus had a significantly stronger firing rate when the patient was virtually “located” in the upper left corner than in any other location of the virtual town, showing its place selectivity. The authors found that 24% of neurons recorded in the hippocampus displayed a pattern of place selectivity, a proportion that was significantly larger than in the other brain structures they explored. Using a very similar procedure in a virtual environment in patients with intracranial electrodes, a recent study identified probable grid-like cells in humans (Jacobs et al. 2013). These cells were predominantly located in the entorhinal cortex and anterior cingulate cortex.

Interestingly, in both studies, patients did not physically move but moved virtually using button presses on a keyboard or a joystick. Nevertheless, the firing rate of these neurons changed as a function of the “virtual” location of the participants within the virtual environment. This observation indicates that both hippocampal “place cells” and entorhinal and cingulate “grid cells” were coding the patient’s location in the virtual world on the basis of allocentric visual signals, rather than the patient’s position in the real world. Although the findings about these properties of the hippocampus have been mostly interpreted in the research field of spatial navigation and memory (Burgess & O’Keefe 2003), we propose that they can also shed light on the neural underpinnings of bodily self-consciousness, especially on how the brain localizes the self both in everyday life and in situations of multisensory conflict.

Figure 6: Map illustrating the firing rate of one cell in the right hippocampus showing a pattern of place selectivity. The rectangular map represents the virtual town explored by the participant using key presses on a keyboard and the red line represents the participant’s trajectory within the virtual town. The nine white boxes indicate the location of buildings in the virtual town (SA, SB, and SC represent three shops that were “visited” by the participant). Colors from blue to red in the background represent the firing rate of the hippocampal cell as a function of the participant’s location in the virtual town. This neuron displays a significantly higher firing rate when the participant was located in the upper left part of the virtual environment (location shown by a black square). Reproduced from Ekstrom et al. (2003).


As mentioned earlier, the experience of self-location can be manipulated by creating conflicts between visual cues about the location of one’s own body (or an avatar) in the external world and tactile or other somatosensory signals (Ehrsson 2007; Lenggenhager et al. 2011; Lenggenhager et al. 2009; Lenggenhager et al. 2007). These visuo-tactile conflicts can induce the perception of being located closer to the avatar. The recent use of these visuo-tactile conflicts during fMRI recordings showed that the apparent changes in self-location and visuo-spatial perspective were related to signal changes in the temporo-parietal junction, not in the hippocampus (Ionta et al. 2011). It is not clear whether hippocampal place cells’ activity can be recorded with the large-scale, non-invasive functional neuroimaging techniques available. Yet, we predict that visuo-tactile conflicts, by modifying the experienced self-location, should also modify the neural activity of place cells and grid cells and their vestibular modulation (see next section), as shown during navigation in immersive virtual environments (Ekstrom et al. 2003; Jacobs et al. 2013). Future research using intracranial EEG recordings in epileptic patients should endeavour to study directly the relation between place cell activity and the experience of human self-location in situations of conflicting multisensory information.

4.5.2.3 Vestibular signals and place cells

In this section, we emphasize the contribution of vestibular signals to the neural coding of self-location in the hippocampus. As mentioned above, the firing rate of place cells is strongly modulated by allocentric signals, a finding replicated in several studies in rodents (Wiener et al. 2002). Vestibular signals have also been shown to modulate the firing pattern of the hippocampal place cells, which is necessary when animals navigate in darkness (O’Mara et al. 1994).

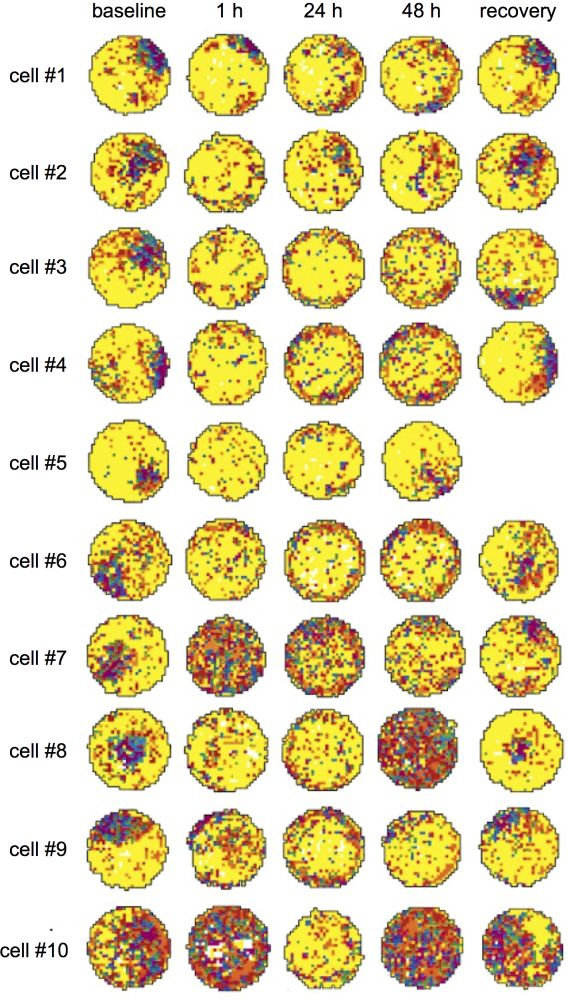

For example, Stackman et al. (2002) temporarily inactivated the vestibular system of rats using bilateral transtympanic injections of tetrodotoxin (TTX). TTX almost immediately abolishes neural activity in the vestibular nerve, producing a temporary vestibular deafferentation that mimics the situation of patients with a bilateral vestibular loss. Figure 7 illustrates changes in the firing rate of ten hippocampal neurons before and after TTX injection. Before TTX injection, hippocampal neurons displayed a typical pattern of place selectivity when the animal explored the circular environment. A major finding of this study was that as early as one hour after vestibular deafferentation, the location-specific activity of the same hippocampal neurons was strongly disturbed. In particular, the vestibular deafferentation reduced the spatial coherence and spatial information content that usually characterize place cells. These disturbances persisted for thirty-six to seventy-two hours after TTX injection, despite the fact that the rats continued to explore their circular environment and had normal locomotor activity twelve hours after TTX injection. These results indicate that place cells continuously integrate vestibular signals to estimate the animal’s location within the physical environment and that vestibular signals strongly contribute to one of the most important neural mechanisms of self-location.
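To make the notion of spatial information content more concrete, the following minimal sketch computes it for a toy firing-rate map. It assumes one commonly used definition from the place-cell literature (information in bits per spike, summed over spatial bins); the exact computation used in the study described above is not reproduced here, and the function name, the four-bin arena, and the firing rates are hypothetical.

```python
import math

def spatial_information(occupancy, rates):
    """Spatial information in bits per spike (assumed definition):
    sum over bins of p_i * (r_i / r_mean) * log2(r_i / r_mean),
    where p_i is the fraction of time spent in bin i and r_i the
    mean firing rate in that bin."""
    total_time = sum(occupancy)
    p = [t / total_time for t in occupancy]
    mean_rate = sum(pi * ri for pi, ri in zip(p, rates))
    if mean_rate == 0:
        return 0.0  # a silent cell carries no spatial information
    info = 0.0
    for pi, ri in zip(p, rates):
        if pi > 0 and ri > 0:  # bins with zero rate contribute nothing
            ratio = ri / mean_rate
            info += pi * ratio * math.log2(ratio)
    return info

# Hypothetical arena with four equally visited bins.
# A cell firing in a single bin is highly place selective.
selective = spatial_information([1, 1, 1, 1], [8.0, 0.0, 0.0, 0.0])
# A cell firing equally everywhere carries no information about place.
uniform = spatial_information([1, 1, 1, 1], [2.0, 2.0, 2.0, 2.0])
```

Under this definition, a sharply tuned firing field yields a high value, whereas a spatially uniform firing pattern yields zero, which is the direction of change reported after vestibular deafferentation.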

The activity of place cells or grid cells has not been recorded after vestibular deafferentation in humans. Nevertheless, the neural consequences of vestibular lesions on place cells (Stackman et al. 2002) and head-direction cells (Stackman & Taube 1997) in animal models are consistent with the effects of unilateral and bilateral vestibular lesions in humans. Patients with vestibular disorders may experience spatial disorientation as measured during path completion tasks (Glasauer et al. 1994) and navigation in virtual environments (Hüfner et al. 2007; Péruch et al. 1999). We propose that vestibular disorders, by disorganizing the firing pattern of place cells in the human hippocampus (and in other brain regions containing place cells), may strongly disturb the sense of self-location and thus the coherent sense of self. This could eventually even lead to a disturbance of the usually very stable feeling of being located at a single place at a given time (see the strong disorganization of place-cell activity in figure 7). Another striking consequence of a bilateral vestibular loss is the induced atrophy of the hippocampus, whose volume is decreased by about seventeen percent (Brandt et al. 2005). Altogether, these data show that one neural mechanism of bodily self-location (place cells encoding the body’s location in the environment) strongly relies on vestibular signals.

Figure 7: Modification of spatial selectivity of ten hippocampal place cells before and after inactivation of the vestibular apparatus with TTX injection. The colors ranging from yellow to purple represent the increase in firing rate of the place cells as a function of the location of the rat in the circular arena. From Stackman et al. (2002).


4.6 The socially embedded self

An important branch of research suggests that the neural mechanisms that dynamically represent multisensory bodily signals not only give rise to a sense of self, but also to the sense of others. The emerging field of social neuroscience has investigated both in animals and humans how the perception of another person modifies neural activity in body-related, sensorimotor neural processing and vice versa.[15] “Sensorimotor sharing” and related mechanisms such as emotional contagion, sensorimotor resonance, or mimicry are thought to enable individuals to understand others’ emotions, intentions, and actions and are thus fundamental for our social functioning. This line of research has evolved from influential electrophysiological studies that identified mirror neurons activated both when a monkey was performing a (body part) action and when observing someone else executing the same action (Gallese et al. 1996; Rizzolatti et al. 1996). A human mirror-neuron-like system has been suggested based on neuroimaging studies that revealed similar brain activations when acting and when observing the same action being executed by another person (e.g., Rizzolatti & Craighero 2004). Importantly, similar mechanisms were found in various sensory systems, as further experiments have shown common neural activity when experiencing and observing pain (Lamm et al. 2011, for a recent meta-analysis), when being touched and observing someone being touched (Keysers et al. 2004), and when inhaling disgusting odorants and observing the face of someone inhaling disgusting odorants (Wicker et al. 2003).

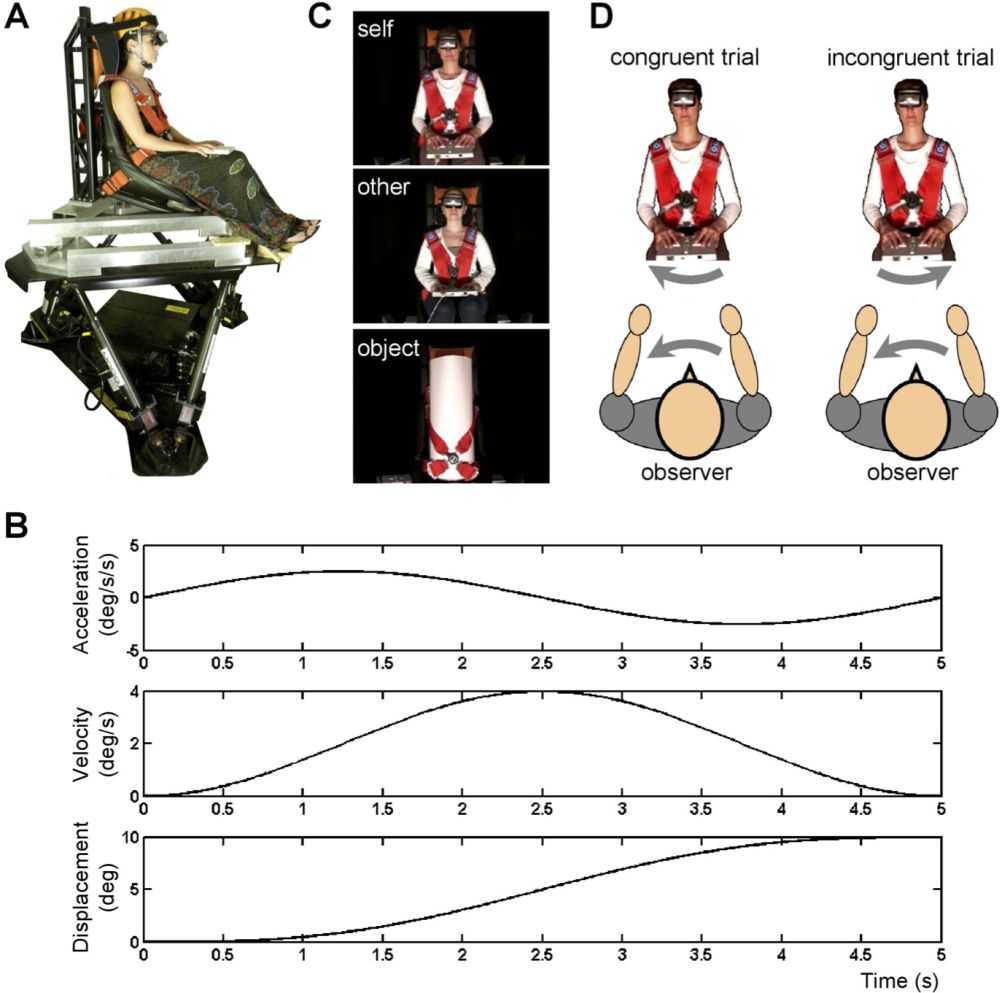

No human neuroimaging study so far has investigated brain mechanisms when experiencing a vestibular sensation and seeing somebody experiencing a vestibular sensation (e.g., being passively moved in space). Yet, recent findings from a behavioural study in humans suggest that the observation of another person’s whole-body motion might influence vestibular self-motion perception (Lopez et al. 2013; see figure 8). In this study, participants were seated on a whole-body motion platform and passively rotated around their main vertical body axis. They were asked in a purely vestibular task to indicate in which direction (clockwise vs. counter-clockwise) they were rotated while looking at videos depicting their own body, another body, or an object rotating in the same plane. The spatial congruency between self-motion and the item displayed in the video was manipulated by creating congruent trials (specular congruency) and incongruent trials (non-specular congruency). The results indicated that self-motion perception was influenced by the observation of videos showing passive whole-body motion. Participants were faster and more accurate when the motion depicted in the video was congruent with their own body motion. This effect depended on the agent depicted in the video, with significantly stronger congruency effects for the “self” videos than for the “other” videos, which is in line with the effects previously reported for the tactile system (Serino et al. 2009; Serino et al. 2008). Lopez et al. (2013) speculated on the existence of a vestibular mirror neuron system in the human brain, that is, a set of brain regions activated both by vestibular signals and by observing bodies being displaced. As noted earlier, vestibular regions show important patterns of visuo-vestibular convergence in the parietal cortex, which could underlie such effects (Bremmer et al. 2002; Grüsser et al. 1990b).

On the basis of these findings as well as the data presented above on the importance of vestibular processes in spatial, cognitive, and social perspective-taking, we propose that the vestibular system is not only involved in shaping and building the perception of a bodily self but is also involved in better understanding and predicting another person’s (full-body) action through sensorimotor resonance (see also Deroualle & Lopez 2014).

Figure 8: Experimental setup used to measure the influence of body movement observation on whole-body self-motion perception. (A) Self-motion perception was tested in twenty-one observers seated on a motion platform. Motion stimuli were yaw rotations lasting for 5 s with peak velocity of 0.1°/s, 0.6°/s, 1.1°/s, and 4°/s. (B) Example of a motion profile consisting of a single cycle sinusoidal acceleration. Acceleration, velocity, and displacement are illustrated for the highest velocity used at 4°/s. (C) Observers wore a head-mounted display through which 5-s videos were presented, depicting their own body, the body of another participant matched for gender and age, or an inanimate object. (D) During congruent trials, the observers and the object depicted in the video were rotated in the same direction (specular congruency). Reproduced from Lopez et al. (2013).
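The motion profile in panel B can be illustrated with a short sketch. It assumes that “single cycle sinusoidal acceleration” means an acceleration of the form a(t) = A·sin(2πt/T) starting from rest, with T the stimulus duration; under that assumption, the peak velocity is reached at T/2 and equals A·T/π. The function name and the derived values are illustrative and are not taken from the original study.

```python
import math

def sinusoidal_profile(peak_velocity, duration, t):
    """Return (acceleration, velocity, displacement) at time t for a
    single-cycle sinusoidal acceleration starting from rest.
    Assumed form: a(t) = A * sin(2*pi*t/T), with A = pi * v_peak / T."""
    amp = math.pi * peak_velocity / duration   # peak acceleration A
    w = 2 * math.pi / duration                 # angular frequency
    acc = amp * math.sin(w * t)
    vel = (amp / w) * (1 - math.cos(w * t))            # integral of acc
    pos = (amp / w) * (t - math.sin(w * t) / w)        # integral of vel
    return acc, vel, pos

# Values from the caption: 5 s duration, highest peak velocity 4 deg/s.
T, V_PEAK = 5.0, 4.0
_, vel_mid, _ = sinusoidal_profile(V_PEAK, T, T / 2)    # velocity at mid-cycle
_, vel_end, pos_end = sinusoidal_profile(V_PEAK, T, T)  # state at the end
peak_acc = math.pi * V_PEAK / T                         # about 2.51 deg/s^2
```

Under this assumption, the platform returns to zero velocity at the end of the 5-s stimulus, and the total angular displacement is v_peak·T/2, that is, 10° for the 4°/s stimulus.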
